Before importing data, make sure that the data going into your base is of good quality. Cleaning data is often easier than you might think, but it does take time: there’s no magic way to ensure that you import only good data that all employees can use right away.

Tips for Catching Bad Data

If you know how to program, you can write scripts that find errors and duplicates and eliminate them. If you don’t know how to code, you can clean the data manually, and on small datasets you may even get better results, because a person can often spot a problem that a script was never written to look for.
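As a rough illustration of such a script, here is a minimal Python sketch that flags missing or malformed fields and drops exact duplicates. The record layout (`id`, `name`, `email`) and the function names are assumptions made up for this example, and the email pattern is deliberately simplistic.

```python
import re

# Hypothetical sample records; the field names are assumptions for illustration.
records = [
    {"id": 1, "name": "Ada Lovelace", "email": "ada@example.com"},
    {"id": 2, "name": "Alan Turing", "email": "alan@example"},      # malformed email
    {"id": 3, "name": "", "email": "grace@example.com"},            # missing name
    {"id": 4, "name": "Ada Lovelace", "email": "ada@example.com"},  # duplicate of id 1
]

# Deliberately simple pattern; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def find_errors(rows):
    """Return (id, problem) pairs for rows with missing or malformed fields."""
    problems = []
    for row in rows:
        if not row["name"].strip():
            problems.append((row["id"], "missing name"))
        if not EMAIL_PATTERN.match(row["email"]):
            problems.append((row["id"], "malformed email"))
    return problems


def drop_duplicates(rows, key_fields=("name", "email")):
    """Keep the first row seen for each combination of key_fields."""
    seen = set()
    unique = []
    for row in rows:
        # Normalize case and whitespace so trivially different copies match.
        key = tuple(row[field].strip().lower() for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


errors = find_errors(records)
cleaned = drop_duplicates(records)
print(errors)
print([row["id"] for row in cleaned])
```

A script like this only catches the problems it was told to look for, which is why a manual review of a sample is still worthwhile.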

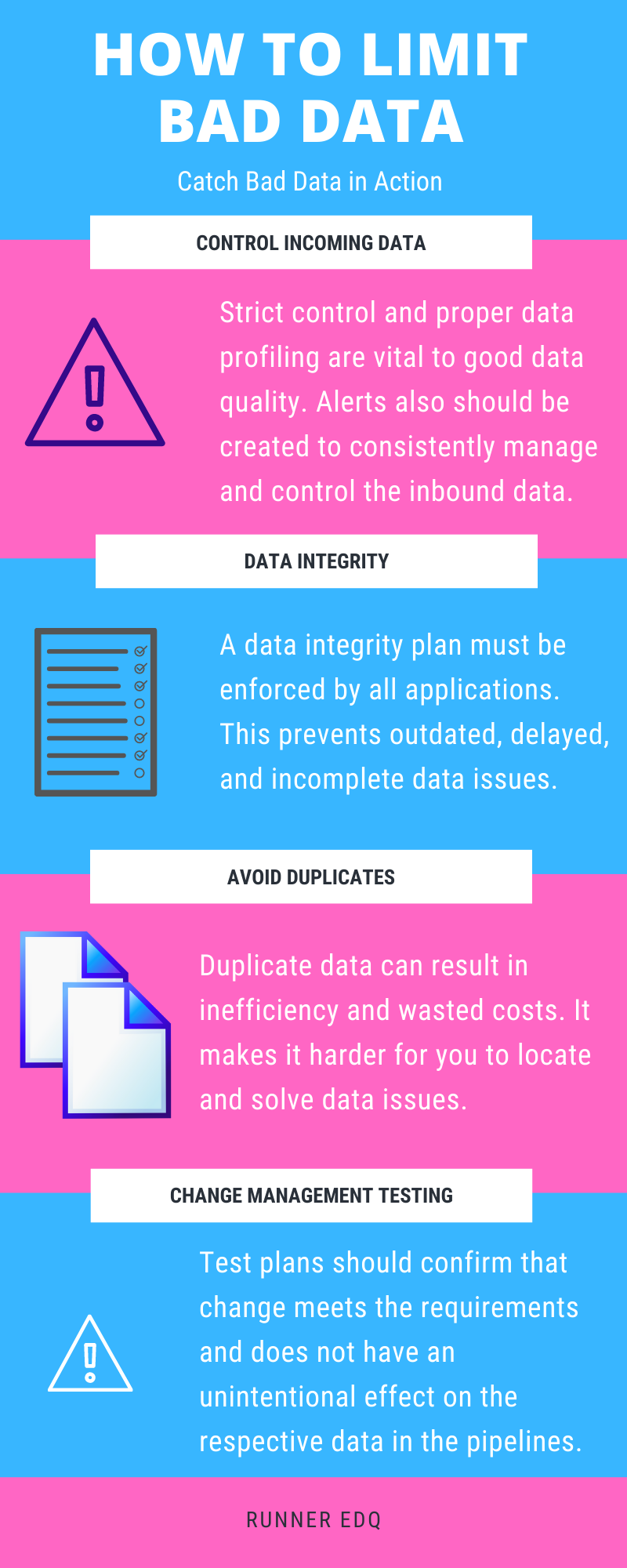

Control of Incoming Data

Bad data usually enters at the point of intake. In a company, most data comes from outside sources, such as third-party software or another organization. Its quality therefore cannot be guaranteed, and strict control of incoming data is the most important of all data quality control tasks. A proper data profiling tool should examine the following aspects:

- Data patterns and formats

- Data completeness

- Data consistency within every record

- Data value abnormalities

It is also necessary to automate data profiling and data quality alerts so that the quality of incoming data is checked continuously as it is received. In addition, every piece of data should be managed under the same best practices and standards, and a centralized catalog and KPI dashboard should be used to measure and monitor data quality accurately.
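The four profiling aspects and the automated alert can be sketched in a few lines of Python. Everything specific here is an assumption for illustration: the order records, the `A-` ID format, the rule that shipping cannot precede ordering, the outlier factor, and the alert threshold. A real profiling tool would make these configurable.

```python
import re
from statistics import median

# Hypothetical batch of incoming order records.
batch = [
    {"order_id": "A-001", "order_date": "2024-01-05", "ship_date": "2024-01-07", "amount": 20.0},
    {"order_id": "A-002", "order_date": "2024-01-06", "ship_date": "2024-01-04", "amount": 25.0},
    {"order_id": "a3", "order_date": "2024-01-06", "ship_date": "2024-01-08", "amount": 30.0},
    {"order_id": "A-004", "order_date": "2024-01-07", "ship_date": "", "amount": 5000.0},
]

ID_PATTERN = r"A-\d{3}"  # assumed ID format


def profile(rows, outlier_factor=10):
    """Return the share of rows failing each of the four profiling checks."""
    typical = median(row["amount"] for row in rows)
    counts = {"bad_format": 0, "incomplete": 0, "inconsistent": 0, "abnormal": 0}
    for row in rows:
        # Patterns and formats: the ID must match the expected pattern.
        if not re.fullmatch(ID_PATTERN, row["order_id"]):
            counts["bad_format"] += 1
        # Completeness: no field may be empty.
        if any(value in ("", None) for value in row.values()):
            counts["incomplete"] += 1
        # Consistency within the record: ISO dates compare correctly as strings.
        elif row["ship_date"] < row["order_date"]:
            counts["inconsistent"] += 1
        # Value abnormalities: amount far above the batch median.
        if row["amount"] > outlier_factor * typical:
            counts["abnormal"] += 1
    return {name: count / len(rows) for name, count in counts.items()}


def raise_alerts(rates, threshold):
    """Return the names of checks whose failure rate exceeds the threshold."""
    return [name for name, rate in rates.items() if rate > threshold]


rates = profile(batch)
print(rates)
print(raise_alerts(rates, threshold=0.2))
```

In practice the list returned by `raise_alerts` would feed a notification channel or the KPI dashboard rather than being printed.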

Data Integrity

As the amount of data grows, along with the number of deliverables and sources, not all datasets can live in a single database system. Referential integrity then has to be enforced by processes and applications rather than by the database itself. Without a plan for enforcing integrity from the start, referenced data can become outdated, delayed, or incomplete, which leads to serious data quality issues.
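When two datasets live in different systems, an application-level check can stand in for a database foreign key. The sketch below, with hypothetical `customers` and `orders` datasets and a made-up `find_orphans` helper, lists child rows that reference a parent that does not exist.

```python
# Hypothetical datasets held in two separate systems.
customers = [
    {"customer_id": 1, "name": "Acme"},
    {"customer_id": 2, "name": "Globex"},
]
orders = [
    {"order_id": 100, "customer_id": 1},
    {"order_id": 101, "customer_id": 3},  # references a customer that does not exist
    {"order_id": 102, "customer_id": 2},
]


def find_orphans(child_rows, parent_rows, foreign_key, parent_key):
    """Return child rows whose foreign key has no matching parent row."""
    parent_ids = {row[parent_key] for row in parent_rows}
    return [row for row in child_rows if row[foreign_key] not in parent_ids]


orphans = find_orphans(orders, customers, "customer_id", "customer_id")
print(orphans)
```

Running a check like this on a schedule, or as part of each load, is one way to notice broken references before they reach downstream reports.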

Avoid Duplicate Data

Duplicate copies of the same data tend to drift out of sync and produce different results, and the effects spread across multiple databases and systems. When a data issue then appears, it is difficult to find the root cause and fix it.
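One way to see whether duplicates have drifted apart is to group copies by a shared key and report the fields on which they disagree. This is a minimal sketch; the `source` labels, field names, and `find_conflicting_duplicates` helper are assumptions for the example.

```python
from collections import defaultdict

# Hypothetical copies of the same customers held in two systems.
copies = [
    {"source": "crm", "customer_id": 1, "email": "ada@example.com", "city": "London"},
    {"source": "billing", "customer_id": 1, "email": "ada@example.com", "city": "Paris"},
    {"source": "crm", "customer_id": 2, "email": "alan@example.com", "city": "Manchester"},
    {"source": "billing", "customer_id": 2, "email": "alan@example.com", "city": "Manchester"},
]


def find_conflicting_duplicates(rows, key_field, compare_fields):
    """Map each duplicated key to the fields on which its copies disagree."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key_field]].append(row)

    conflicts = {}
    for key, group in groups.items():
        if len(group) < 2:
            continue  # only one copy, nothing to compare
        differing = [
            field for field in compare_fields
            if len({row[field] for row in group}) > 1
        ]
        if differing:
            conflicts[key] = differing
    return conflicts


conflicts = find_conflicting_duplicates(copies, "customer_id", ["email", "city"])
print(conflicts)
```

The report tells you which copies disagree but not which one is right; deciding on a single authoritative source is still a design decision.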

Automated Regression Testing for Change Management

Data quality issues often arise when a new dataset is introduced or an existing dataset is modified. For successful change management, test plans should cover two themes:

- Confirming that the change meets the requirements

- Making sure the change does not have an unintentional effect on the data in the pipelines that should not be altered.

When a change is made to mission-critical datasets, regression testing must be run for every deliverable, and comparisons should cover every row and field of a dataset. Because big data technologies evolve quickly, system migrations typically occur every few years. Automated regression tests, together with thorough data comparisons, are necessary to make sure good data quality is consistently maintained.
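A row-by-row, field-by-field comparison can be sketched as follows. The snapshots, the `id` key, and the `compare_datasets` and `unexpected_changes` helpers are hypothetical; the idea is to diff the dataset before and after a change, then separate the differences the change was meant to produce from the ones it was not.

```python
# Hypothetical snapshots of a dataset before and after a pipeline change.
before = [
    {"id": 1, "price": 10.0, "currency": "USD"},
    {"id": 2, "price": 20.0, "currency": "USD"},
]
after = [
    {"id": 1, "price": 11.0, "currency": "USD"},  # intended price update
    {"id": 2, "price": 20.0, "currency": "usd"},  # unintended side effect
]


def compare_datasets(old_rows, new_rows, key_field):
    """Return (key, field, old_value, new_value) for every difference."""
    old_by_key = {row[key_field]: row for row in old_rows}
    new_by_key = {row[key_field]: row for row in new_rows}
    diffs = []
    for key in sorted(old_by_key.keys() | new_by_key.keys()):
        old, new = old_by_key.get(key), new_by_key.get(key)
        if old is None:
            diffs.append((key, "*", "missing", "present"))  # row was added
        elif new is None:
            diffs.append((key, "*", "present", "missing"))  # row was removed
        else:
            for field in sorted(old.keys() | new.keys()):
                if old.get(field) != new.get(field):
                    diffs.append((key, field, old.get(field), new.get(field)))
    return diffs


def unexpected_changes(diffs, expected):
    """Keep only differences whose (key, field) pair was not planned."""
    return [diff for diff in diffs if (diff[0], diff[1]) not in expected]


diffs = compare_datasets(before, after, "id")
surprises = unexpected_changes(diffs, expected={(1, "price")})
print(diffs)
print(surprises)
```

An automated regression test would fail whenever the list of unexpected differences is non-empty, and would also confirm that every planned difference actually appears in the diff.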